Decompositional reconstruction of 3D scenes, with complete shapes and detailed textures for every object in the scene, is appealing for downstream applications but remains challenging, particularly when only sparse views are available as input. Recent approaches incorporate semantic or geometric regularization to address this issue, but they degrade significantly in underconstrained areas and fail to recover occluded regions. We argue that the key to solving this problem lies in supplying the missing information for these areas. To this end, we propose DP-Recon, which employs diffusion priors in the form of Score Distillation Sampling (SDS) to optimize the neural representation of each individual object under novel views. This provides additional information for the underconstrained areas, but directly incorporating the diffusion prior can cause conflicts between the reconstruction and generative guidance. We therefore introduce a visibility-guided approach that dynamically adjusts the per-pixel SDS loss weights. Together, these components improve both geometry and appearance recovery while remaining faithful to the input images. Extensive experiments on Replica and ScanNet++ demonstrate that our method significantly outperforms state-of-the-art (SOTA) methods. Notably, it achieves better object reconstruction with 10 input views than the baselines achieve with 100 views. Our method also enables seamless text-based editing of geometry and appearance through SDS optimization, and produces decomposed object meshes with detailed UV maps that support photorealistic visual effects (VFX) editing.
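For reference, the standard SDS gradient (as introduced in DreamFusion) is written below, where $\theta$ are the parameters of an object's neural representation, $x$ is its rendering from a novel view, $x_t = \alpha_t x + \sigma_t \epsilon$ is the noised rendering, $\hat{\epsilon}_{\phi}$ is the frozen diffusion model's noise prediction conditioned on prompt $y$, and $w(t)$ is a timestep weighting. The second line is only a sketch of how a per-pixel weight $\lambda_p$ could enter the gradient; the symbol $\lambda_p$ and this exact form are assumptions, since the abstract does not state how DP-Recon maps visibility to weights.

$$
\nabla_{\theta}\mathcal{L}_{\mathrm{SDS}} = \mathbb{E}_{t,\epsilon}\!\left[ w(t)\,\big(\hat{\epsilon}_{\phi}(x_t; y, t) - \epsilon\big)\,\frac{\partial x}{\partial \theta} \right]
$$

$$
\nabla_{\theta}\mathcal{L}_{\mathrm{SDS}}^{\mathrm{vis}} = \mathbb{E}_{t,\epsilon}\!\left[ w(t) \sum_{p} \lambda_p \big(\hat{\epsilon}_{\phi}(x_t; y, t) - \epsilon\big)_p\,\frac{\partial x_p}{\partial \theta} \right], \qquad \lambda_p \in [0, 1]
$$

Here $p$ indexes pixels; a small $\lambda_p$ lets the reconstruction loss dominate where the input views already constrain the object, and a large $\lambda_p$ lets the diffusion prior fill in poorly observed or occluded regions.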

Our method performs decompositional neural reconstruction with a generative diffusion prior. Alongside the reconstruction loss, we use this prior to optimize both the geometry and the appearance of each object, filling in missing information in unobserved and occluded regions. We further propose a visibility-guided approach that dynamically adjusts the SDS loss, alleviating the conflict between the reconstruction objective and the generative prior guidance.
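The visibility-guided weighting can be illustrated with a small NumPy sketch. It is not the DP-Recon implementation: it assumes the renderer's Jacobian is applied afterwards by an autodiff framework, and the visibility-to-weight mapping (`1 - visibility` with an optional `floor`) as well as the helper names `sds_pixel_grad`, `visibility_weights`, and `weighted_sds_grad` are hypothetical. It only shows how a per-pixel weight map can scale the SDS gradient so that well-observed pixels are driven mainly by the reconstruction loss and poorly observed ones by the diffusion prior.

```python
import numpy as np


def sds_pixel_grad(eps_pred: np.ndarray, eps: np.ndarray, w_t: float) -> np.ndarray:
    """Standard SDS gradient with respect to the rendered image x: w(t) * (eps_hat - eps).

    The renderer Jacobian dx/dtheta is NOT applied here; an autodiff
    framework would do that when the gradient is backpropagated.
    """
    return w_t * (eps_pred - eps)


def visibility_weights(visibility: np.ndarray, floor: float = 0.0) -> np.ndarray:
    """Hypothetical visibility-to-weight mapping (an assumption, not the paper's).

    visibility is an (H, W) map in [0, 1]: 1 means well observed in the
    input views, 0 means unobserved or occluded. Well-observed pixels get a
    small SDS weight; poorly observed pixels get a weight close to 1.
    """
    v = np.clip(visibility, 0.0, 1.0)
    return np.maximum(1.0 - v, floor)


def weighted_sds_grad(eps_pred: np.ndarray, eps: np.ndarray, w_t: float,
                      visibility: np.ndarray, floor: float = 0.0) -> np.ndarray:
    """Per-pixel SDS gradient scaled by the visibility weight map.

    eps_pred and eps have shape (H, W, C); visibility has shape (H, W).
    """
    grad = sds_pixel_grad(eps_pred, eps, w_t)
    # Broadcast the (H, W) weight map over the channel axis.
    return grad * visibility_weights(visibility, floor)[..., None]


# Toy usage: the left half of a 4x4 image is fully observed, the right half is not.
rng = np.random.default_rng(0)
eps = rng.standard_normal((4, 4, 3))  # noise added to the rendering
eps_pred = eps + 0.1                  # stand-in for the diffusion model's prediction
vis = np.zeros((4, 4))
vis[:, :2] = 1.0
g = weighted_sds_grad(eps_pred, eps, w_t=1.0, visibility=vis)
print(np.abs(g[:, :2]).max())  # SDS contributes nothing where the input views constrain the pixel
print(np.abs(g[:, 2:]).max())  # full SDS gradient in the unobserved half
```

In a full pipeline, such a weighted gradient is typically injected through a surrogate loss, for example `(g.detach() * x).sum()` in PyTorch with `x` the rendered image, so that backpropagation multiplies it by the renderer Jacobian. A nonzero `floor` would keep a little generative guidance even in well-observed pixels; whether DP-Recon does anything similar is not stated here.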

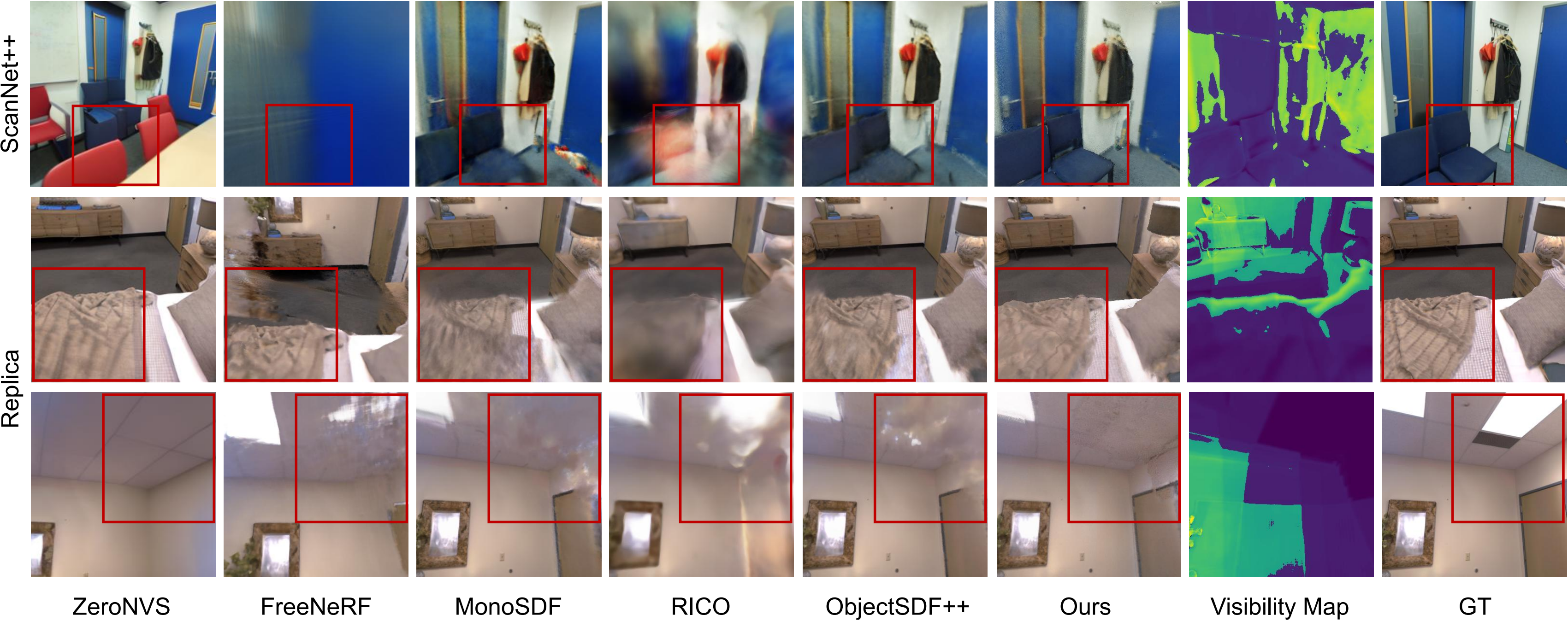

Examples from Replica and ScanNet++ demonstrate that our model produces higher-quality reconstructions than the baselines: more accurate reconstruction in sparsely captured areas, more precise object structures, smoother background reconstruction, and fewer floating artifacts.

Our appearance prior also supplies plausible additional information in sparsely captured regions, yielding higher-quality rendering in these areas than the baselines, whose results show visible artifacts.

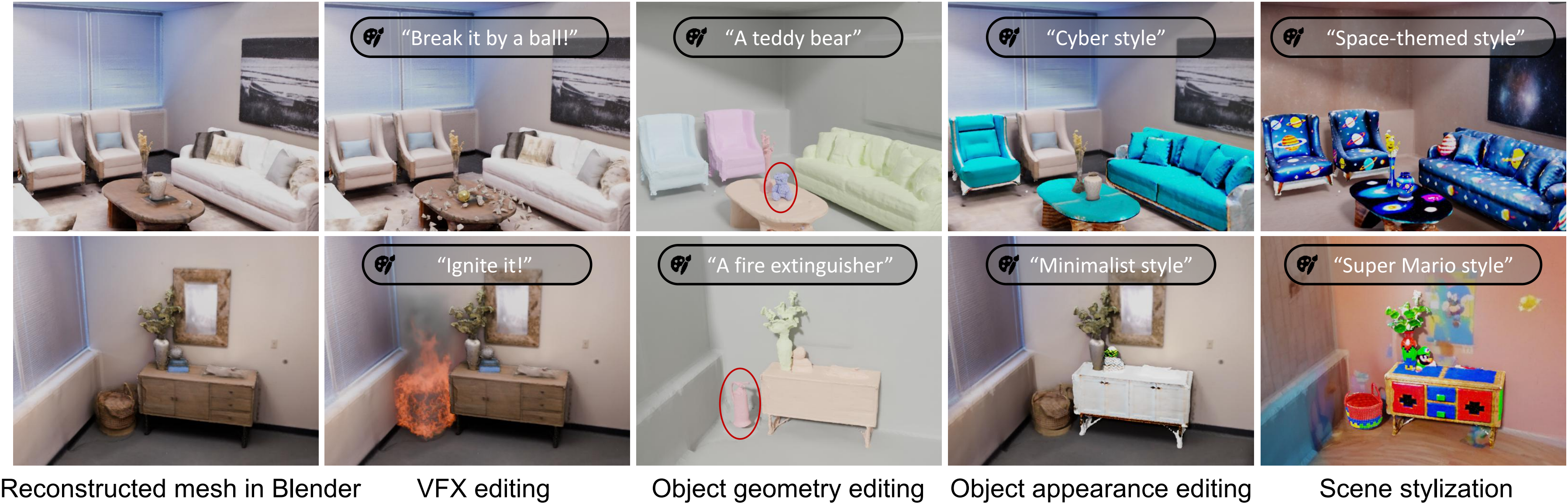

Beyond reconstruction, our method supports seamless text-based editing of geometry and appearance through SDS optimization, and the decomposed object meshes come with detailed UV maps that support photorealistic VFX editing.

By transforming all objects in the scene into a new, unified style, we can easily generate a new scene with the same layout but a different appearance, which could benefit applications such as the Metaverse.

Our model generalizes well to in-the-wild scenes, such as those captured in YouTube videos, producing high-quality reconstructions with detailed geometry and appearance from only 15 input views.

@inproceedings{ni2025dprecon,
  title={Decompositional Neural Scene Reconstruction with Generative Diffusion Prior},
  author={Ni, Junfeng and Liu, Yu and Lu, Ruijie and Zhou, Zirui and Zhu, Song-Chun and Chen, Yixin and Huang, Siyuan},
  booktitle={CVPR},
  year={2025}
}